Research at Wikimania 2019: More communication doesn't make editors more productive; Tor users doing good work; harmful content rare on English Wikipedia: And other new research publications

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

This year's Wikimania community conference in Stockholm, Sweden featured a well-attended Research Space, a 2.5-day track of presentations, tutorials, and lightning talks. Among them:

Enabling "easier direct messaging [on a wiki] increases... messaging. No change to article production. Newcomers may make fewer contributions", according to this presentation of an upcoming paper studying the effect of a "message walls" feature on Wikia/Fandom wikis that offered a more user-friendly alternative to the existing user talk pages. From the abstract:[1]

"[We examine] the impact of a new communication feature called “message walls” that allows for faster and more intuitive interpersonal communication in wikis. Using panel data from a sample of 275 wiki communities that migrated to message walls and a method inspired by regression discontinuity designs, we analyze these transitions and estimate the impact of the system’s introduction. Although the adoption of message walls was associated with increased communication among all editors and newcomers, it had little effect on productivity, and was further associated with a decrease in article contributions from new editors."

Presentation about a paper titled "Tor Users Contributing to Wikipedia: Just Like Everybody Else?", an analysis of the quality of edits that slipped through Wikipedia's general block of the Tor anonymizing tool. From the abstract:[2]

"Because of a perception that privacy enhancing tools are a source of vandalism, spam, and abuse, many user-generated sites like Wikipedia block contributions from anonymity-seeking editors who use proxies like Tor. [...] Although Wikipedia has taken steps to block contributions from Tor users since as early as 2005, we demonstrate that these blocks have been imperfect and that tens of thousands of attempts to edit on Wikipedia through Tor have been successful. We draw upon several data sources to measure and describe the history of Tor editing on Wikipedia over time and to compare contributions of Tor users to other groups of Wikipedia users. Our analysis suggests that the Tor users who manage to slip through Wikipedia's ban contribute content that is similar in quality to unregistered Wikipedia contributors and to the initial contributions of registered users."

See also our coverage of a related paper by some of the same authors: "Privacy, anonymity, and perceived risk in open collaboration: a study of Tor users and Wikipedians"

"Supporting deliberation and resolution on Wikipedia" - presentation about the "Wikum" online tool for summarizing large discussion threads and a related paper[3], quote:

"We collected an exhaustive dataset of 7,316 RfCs on English Wikipedia over the course of 7 years and conducted a qualitative and quantitative analysis into what issues affect the RfC process. Our analysis was informed by 10 interviews with frequent RfC closers. We found that a major issue affecting the RfC process is the prevalence of RfCs that could have benefited from formal closure but that linger indefinitely without one, with factors including participants' interest and expertise impacting the likelihood of resolution. [...] we developed a model that predicts whether an RfC will go stale with 75.3% accuracy, a level that is approached as early as one week after dispute initiation. [...] RfCs in our dataset had on average 34.37 comments between 11.79 participants. As a sign of how unwieldy these discussions can get, the highest number of comments on an RfC is 2,375, while the highest number of participants is 831."

The research was presented in 2018 at the CSCW conference and at the Wikimedia Research Showcase. See also press release: "Why some Wikipedia disputes go unresolved. Study identifies reasons for unsettled editing disagreements and offers predictive tools that could improve deliberation.", dataset, and our previous coverage: "Wikum: bridging discussion forums and wikis using recursive summarization".

See our 2016 review of the underlying paper: "A new algorithmic tool for analyzing rationales on articles for deletion" and related coverage

Presentation about ongoing survey research by the Wikimedia Foundation focusing on reader demographics, e.g. finding that the majority of readers of "non-colonial" language versions of Wikipedia are monolingual native speakers (i.e. don't understand English).

A presentation about an ongoing project to analyze the usage of citations on Wikipedia highlighted this result among others.

See last month's OpenSym coverage about the same research.

About the "Wikipedia Insights" tool for studying Wikipedia pageviews, see also our earlier mention of an underlying paper.

The presentation "Understanding content moderation on English Wikipedia" by researchers from Harvard University's Berkman Klein Center reported on an ongoing project, finding e.g. that only about 0.2% of revisions contain harmful content, and concluding that "English Wikipedia seems to be doing a pretty good job [removing harmful content - but:] Folks on the receiving end probably don't feel that way."

Presentation about "on-going work on English Wikipedia to assist checkusers to efficiently surface sockpuppet accounts using machine learning" (see also research project page)

Demonstration of "Wiki Atlas, [...] a web platform that enables the exploration of Wikipedia content in a manner that explicitly links geography and knowledge", and a (prototype) augmented reality app that shows Wikipedia articles about e.g. buildings.

Presentation of ongoing research detecting subject matter experts among Wikipedia contributors using machine learning. Among the findings: Subject matter experts concentrate their activity within a topic area, focusing on adding content and referencing external sources. Their edits persist 3.5 times longer than those of other editors. In an analysis of 300,000 editors, 14-32% were classified as subject matter experts.

The presentation "Improving Knowledge Base Construction from Robust Infobox Extraction" about a paper already highlighted in our July issue explained a method used to ingest facts from Wikipedia infoboxes into the knowledge base underlying Apple's Siri question answering system. The speaker noted the decision not to rely solely on Wikidata for this purpose, because Wikipedia still offers richer information than Wikidata - especially on less popular topics. An audience member asked what Apple might be able to give back to the Wikimedia community from this work on extracting and processing knowledge for Siri. The presenter responded that publishing this research was already the first step, and more would depend on support from higher-ups at the company.

From the abstract of the underlying paper:[4][5]

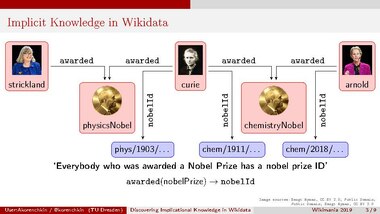

"A distinguishing feature of Wikidata [among other knowledge graphs such as Google's "Knowledge Graph" or DBpedia] is that the knowledge is collaboratively edited and curated. While this greatly enhances the scope of Wikidata, it also makes it impossible for a single individual to grasp complex connections between properties or understand the global impact of edits in the graph. We apply Formal Concept Analysis to efficiently identify comprehensible implications that are implicitly present in the data. [...] We demonstrate the practical feasibility of our approach through several experiments and show that the results may lead to the discovery of interesting implicational knowledge. Besides providing a method for obtaining large real-world data sets for FCA, we sketch potential applications in offering semantic assistance for editing and curating Wikidata."

See last month's OpenSym coverage about the same research

The now traditional annual overview of scholarship and academic research on Wikipedia and other Wikimedia projects from the past year (building on this research newsletter). Topic areas this year included the gender gap, readability, article quality, and measuring the impact of Wikimedia projects on the world. Presentation slides

See the page of the monthly Wikimedia Research Showcase for videos and slides of past presentations.

Other recent publications that could not be covered in time for this issue include the items listed below. Contributions, whether reviewing or summarizing newly published research, are always welcome.

In search tasks on Wikipedia, people who are in unpleasant moods tend to issue more queries and perceive a higher level of difficulty than people in neutral moods.[6]

This paper, titled "Building a Knowledge Graph for Recommending Experts",[7] describes a method to build a knowledge graph by integrating data from Google Scholar and Wikipedia to help students find a research advisor or thesis committee member.

From the abstract:[8]

"The Wikipedia category graph serves as the taxonomic backbone for large-scale knowledge graphs like YAGO or Probase, and has been used extensively for tasks like entity disambiguation or semantic similarity estimation. Wikipedia's categories are a rich source of taxonomic as well as non-taxonomic information. The category 'German science fiction writers', for example, encodes the type of its resources (Writer), as well as their nationality (German) and genre (Science Fiction). [...] we introduce an approach for the discovery of category axioms that uses information from the category network, category instances, and their lexicalisations. With DBpedia as background knowledge, we discover 703k axioms covering 502k of Wikipedia's categories and populate the DBpedia knowledge graph with additional 4.4M relation assertions and 3.3M type assertions at more than 87% and 90% precision, respectively."

This paper[9] describes a system to generate Spanish-Basque and English-Irish translations for image captions in Wikimedia Commons.

About an abuse detection model that leverages Natural Language Processing techniques, reaching an accuracy of ∼85%.[10] (see also research project page on Meta-wiki, university page: "Of Trolls and Troublemakers", research showcase presentation)

A method to infer edit quality directly from the edit's textual content using deep encoders, and a novel dataset containing ∼21M revisions across 32K Wikipedia pages.[11]

From the abstract:[12]

"we focus on the problem of interlinking Wikipedia tables for two types of table relations: equivalent and subPartOf. [...] We propose TableNet, an approach that constructs a knowledge graph of interlinked tables with subPartOf and equivalent relations. TableNet consists of two main steps: (i) for any source table we provide an efficient algorithm to find all candidate related tables with high coverage, and (ii) a neural based approach, which takes into account the table schemas, and the corresponding table data, we determine with high accuracy the table relation for a table pair. We perform an extensive experimental evaluation on the entire Wikipedia with more than 3.2 million tables. We show that with more than 88\% we retain relevant candidate tables pairs for alignment. Consequentially, with an accuracy of 90% we are able to align tables with subPartOf or equivalent relations. "

From the abstract and conclusions:[13]

"we believe an important advancement in the outlook of knowledge graph development is the emergence of Wikidata as an identifier broker and as a scoping tool. [...] To unite our data silos in biodiversity science, we need agreement and adoption of a data modelling framework. A knowledge graph built using RDF, supported by an identity broker such as Wikidata, has the potential to link data and change the way biodiversity science is conducted.

From the abstract:[14]

"The paper summarizes our research in the area of unsupervised categorization of Wikipedia articles. As a practical result of our research, we present an application of spectral clustering algorithm used for grouping Wikipedia search results. The main contribution of the paper is a representation method for Wikipedia articles that has been based on combination of words and links and used for categoriation of search results in this repository. "

From the abstract:[15]

"This paper presents preliminary results from an empirical experiment of oral information collection in rural Namibia converted into citations on Wikipedia. The intention was to collect information from an indigenous group which is currently not derivable from written material and thus remains unreported to Wikipedia under its present rules. We argue that a citation to an oral narrative lacks nothing that one to a written work would offer, that quality criteria like reliability and verifiability are easily comparable and ascertainable. On a practical level, extracting encyclopaedic like information from an indigenous narrator requires a certain amount of prior insight into the context and subject matter to ask the right questions. Further investigations are required to ensure an empirically sound approach to achieve that."

From the abstract:[16]

"With an under-represented contribution from Global South editors and especially indigenous communities, Wikipedia, aiming at encompassing all human knowledge, falls short of indigenous knowledge representation. A Namibian academia community outreach initiative has targeted rural schools with OtjiHerero speaking teachers in their efforts to promote local content creation, yet with little success. Thus this paper reports on the effectiveness of value sensitive persuasion to encourage Wikipedia contribution of indigenous knowledge. Besides a significant difference in values between the indigenous community and Wikipedia we identify a host of conflicts that might be hampering the adoption of Wikipedia by indigenous communities."


Discuss this story

Regarding the one in every 200 pageviews leading to a citation ref click, that's surprisingly low given the editor-side emphasis on WP:RS. It would be good to see more students being explicitly taught information literacy and best practices specifically for reading Wikipedia. The nearest reader-side resources I know of within Wikipedia are Help:Wikipedia:_The_Missing_Manual/Appendixes/Reader's_guide_to_Wikipedia and Wikipedia:Research_help, which don't really cover the relevant topics, e.g. why and how to check the references. T.Shafee(Evo&Evo)talk 01:33, 1 November 2019 (UTC)

"All Talk" flaws

This study may have measured the wrong things. The goal was assumed to be "productivity" as defined by article contributions, but there was no theoretical justification given for this decision. Currently, the best model for what we want to see happen for newcomers is that their first edits aren't reverted, and that the editors stick around and stay active for a long time. See meta:Research:New_editors'_first_session_and_retention. Adamw (talk) 13:39, 5 November 2019 (UTC)

Characterizing Reader Behavior on Wikipedia

Anyone else notice the striking statistic that over 1/3rd of English Wikipedia readers are not native English speakers? I'm a native English speaker and have a hard time reading a lot of our articles (due to the high prevalence of jargon, overly complex run-on sentences, and mangled grammar). Perhaps we should make more of an effort to make our articles readable rather than trying (poorly) to make them sound academic and erudite. Kaldari (talk) 15:30, 10 November 2019 (UTC)

Image for Wikidata item

Is there a particular point in using an image that shows only women to illustrate the Wikidata item about knowledge graphs? I don't want to make any assumptions here, other than that it seems obvious that someone (singular or plural) made the decision and had reasons for it. – Athaenara ✉ 19:15, 26 November 2019 (UTC)