This piece examines a key question facing new Wikimedia projects such as Wikidata: how to properly represent knowledge digitally at the most basic level. There is a real danger that an inflexible, prescriptive approach to data will severely limit the scope, capabilities and ultimate utility of the resulting service.

At one level, the textual representation of information and knowledge in books and online can be viewed as simply another serialisation and packaging format, optimised for human rather than machine consumption. Within the Wikipedia community – Wikidata and elsewhere – there is a perceived utility in using more structured, machine-friendly formats to enable better information sharing and computer-assisted analysis and research. However, there remains considerable debate about the best approach, to which I will contribute the views I have developed over nearly a decade of research and development projects at the Bodleian Library[1] and, before that, through my involvement with knowledge management in the commercial domain.

Secondly, there will be no single unifying metadata "standard" (or even a few such standards), so deal with it! For example, biosharing.org lists just under 200 metadata standards for experimental biosciences alone. The notion of a single standard that led to the development of MARC, and latterly RDA, in the library sphere is simply not applicable to the way in which metadata is now used within the field of academic enquiry. This means that any solution for handling digital objects must have a mechanism for handling a multiplicity of standards, ideally even within an individual object – for example, bibliographic, rights and preservation metadata may quite reasonably be encoded using different standards.[3] The corollary of this is that if we have such a mechanism there is no need to abandon existing standards prematurely. This avoidance of over-prescribing and premature decision-making will be familiar to Agile developers. Consequently, Wikidata developers would be ill-advised to aim for a rigid, unitary metadata model – even at a basic level, representing knowledge is too complex and variable for such an approach.
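To make the idea of several standards coexisting within one object more concrete, here is a minimal sketch in Python. It is not how Wikidata or any particular repository is implemented. The class names, the identifier and the simplified payload fields are all hypothetical, and the standards named (MODS, ODRL, PREMIS) are used only as labels for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class MetadataRecord:
    """One block of metadata, kept in the shape its own standard defines."""
    standard: str            # label for the standard the payload follows
    purpose: str             # e.g. "bibliographic", "rights", "preservation"
    payload: Dict[str, str]  # simplified, hypothetical fields (not real schemas)


@dataclass
class DigitalObject:
    """A web-addressable object carrying any number of metadata records."""
    identifier: str
    records: List[MetadataRecord] = field(default_factory=list)

    def add(self, record: MetadataRecord) -> None:
        # No record is rejected for using a different standard from the others.
        self.records.append(record)

    def by_purpose(self, purpose: str) -> List[MetadataRecord]:
        return [r for r in self.records if r.purpose == purpose]

    def standards(self) -> List[str]:
        return sorted({r.standard for r in self.records})


# Hypothetical object with three records, each under a different standard.
obj = DigitalObject("example:object/1")
obj.add(MetadataRecord("MODS", "bibliographic", {"title": "An example letter"}))
obj.add(MetadataRecord("ODRL", "rights", {"permission": "reproduce"}))
obj.add(MetadataRecord("PREMIS", "preservation", {"format": "image/tiff"}))

print(obj.standards())
print([r.standard for r in obj.by_purpose("rights")])
```

The point of the design is that the container knows only which standard each record claims to follow and what it is for. Nothing forces the records into a common schema, so an existing standard can stay in use for as long as it remains useful.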

So how do we balance this proliferation of standards with the desire for sharing and interoperability? We can find several key areas in which a consensus view is emerging, not through explicit standard-setting activities but through experience and necessity. This gives us a good indication that these are sensible points on which to base longer-term interoperability.

These common properties are obviously very amenable to storage and manipulation in a relational database. Indeed, for large-scale data ingestion with the subsequent clean-up, de-duplication and merging of records/objects, this is likely to be the best tool for the job. However, once this task has been completed and we delve into the more varied elements of the objects, the advantages of a purely relational database approach are less clear-cut.

Instead, we can treat each object as an independent, web-addressable entity – which in practice is desirable in its own right as a mode of publication and dissemination. In particular, we can use search engines to index across heterogeneous fields – Apache Solr excels at faceting and grouping, while ElasticSearch can index arbitrary documents without a predefined schema (i.e. all of the varied domain-specific metadata). These tools give users ways into the material that are much easier to use and more intuitive.

The objects alone are only a part of the picture – the relationships between objects are critical to the structure of the overall collection. In fact, in many cases (especially in the humanities) a significant proportion of research activity actually involves discovering, analysing and documenting such relationships. The Semantic Web or, more precisely, the ideas behind the Resource Description Framework (RDF) and linked data, provide a mechanism for expressing these relationships in a way that is structured, through the use of defined vocabularies, but also flexible and extensible, through the ability to use multiple vocabularies. While theoretically it is possible to express all metadata in RDF, this is not practical for performance[5] and usability[6] reasons, and is unnecessary.
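As a rough illustration of relationships expressed with more than one vocabulary, the following Python sketch stores triples as plain tuples rather than using an RDF library. The `http://example.org/` namespace and all of the resources in it are hypothetical. The Dublin Core Terms and FOAF namespaces are real, but the choice of properties here is purely illustrative.

```python
# Two established vocabularies plus a hypothetical namespace for our own things.
DCTERMS = "http://purl.org/dc/terms/"
FOAF = "http://xmlns.com/foaf/0.1/"
EX = "http://example.org/"  # hypothetical; stands in for a real collection

# Each relationship is a (subject, predicate, object) triple.
triples = {
    (EX + "letter/42", DCTERMS + "creator", EX + "person/alice"),
    (EX + "letter/42", DCTERMS + "references", EX + "book/7"),
    (EX + "person/alice", FOAF + "knows", EX + "person/bob"),
}


def match(store, s=None, p=None, o=None):
    """Return triples matching the given pattern; None acts as a wildcard."""
    return sorted(
        t for t in store
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    )


def vocabularies(store):
    """List the namespaces of the predicates in use."""
    return sorted({p.rsplit("/", 1)[0] + "/" for _, p, _ in store})


print(match(triples, s=EX + "letter/42"))
print(vocabularies(triples))
```

Adding a further vocabulary requires no change to the store or to the query function, which is the flexibility that the linked data approach offers for relationships, while the bulk of the descriptive metadata can remain outside RDF.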

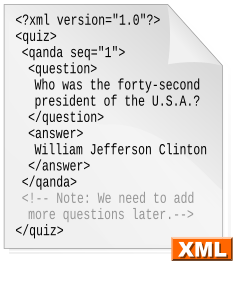

This model of linked data, combining a mix of standardised fields and less-structured textual content, should not be entirely unfamiliar to people used to working with Semantic MediaWiki, sharing their metadata on Wikidata, or using data boxes in Wikipedia! However, when applying this model to practical research projects it emerges that a critical element is still lacking. Although we can describe relationships between objects using RDF, we are limited to making assertions of the form <subject> <predicate/relationship> <object> (the RDF "triple"). In practice, relatively few statements of this form can be considered universally and absolutely true. For example: a person may live at a particular address but only for a certain period of time; the copyright on a book may last for 50 years, but only in a particular country. Essentially, what is needed is a mechanism to define the circumstances under which a relationship can be considered valid. A number of possible mechanisms could do this, for example replacing RDF triples with "quads" that include a context object, or annotating relationships using OAC (the Open Annotation Collaboration model).
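One way to picture the "quad" idea is sketched below in Python. It is a simplification under stated assumptions: in RDF datasets the fourth element is normally the name of a graph, whereas here the context is an inline object so that the example stays self-contained. The `Context` and `Quad` classes, the `ex:` identifiers, the dates and the jurisdiction code are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class Context:
    """Circumstances under which a statement is considered valid."""
    valid_from: Optional[date] = None
    valid_to: Optional[date] = None
    jurisdiction: Optional[str] = None

    def holds(self, on: date, jurisdiction: Optional[str] = None) -> bool:
        if self.valid_from and on < self.valid_from:
            return False
        if self.valid_to and on > self.valid_to:
            return False
        # A statement scoped to a jurisdiction is only asserted within it.
        if self.jurisdiction and jurisdiction != self.jurisdiction:
            return False
        return True


@dataclass(frozen=True)
class Quad:
    subject: str
    predicate: str
    obj: str
    context: Context


def holding(quads: List[Quad], on: date, jurisdiction: Optional[str] = None) -> List[Quad]:
    """Return only the statements that are valid in the given circumstances."""
    return [q for q in quads if q.context.holds(on, jurisdiction)]


# Hypothetical data mirroring the two examples in the text.
residence = Quad("ex:person/jane", "ex:livesAt", "ex:address/12-high-street",
                 Context(valid_from=date(1850, 1, 1), valid_to=date(1862, 12, 31)))
copyright_ = Quad("ex:book/7", "ex:inCopyright", "true",
                  Context(valid_to=date(2000, 12, 31), jurisdiction="GB"))
quads = [residence, copyright_]

print(holding(quads, date(1855, 6, 1)))        # residence only
print(holding(quads, date(1990, 1, 1), "GB"))  # copyright only
```

The same triple can then appear several times with different contexts, which is closer to how such statements behave in real research material than a single unqualified assertion.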

These examples are really just special cases of a more general requirement that is of great interest to scholars. This is the ability to qualify a relationship or assertion to capture an element of provenance. Specifically, we need to know who made an assertion, when, on the basis of what evidence, and under which circumstances it holds. This may be manifested in several ways:

These qualifications become especially important when we try to use computational tools such as analytics and visualisation. Indeed, projects such as Mapping the Republic of Letters (Stanford University) are expending significant effort to find ways of representing uncertainty and omission in visualisations.
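The provenance requirement can be sketched in the same spirit. The Python below is only an illustration, assuming a hypothetical `Assertion` record that carries who made a claim, when, and on what evidence, together with a coarse certainty label. The scholars, dates and letter are invented for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Assertion:
    subject: str
    predicate: str
    value: Optional[str]  # None records an acknowledged gap in the evidence
    asserted_by: str      # who made the assertion
    asserted_on: str      # when it was made (ISO date string)
    evidence: str         # what it is based on
    certainty: str        # hypothetical scale: "certain", "probable", "conjectural"


def state_of_knowledge(assertions: List[Assertion]) -> Dict[Tuple[str, str], List[Assertion]]:
    """Group every claim about the same thing instead of choosing a winner."""
    grouped = defaultdict(list)
    for a in assertions:
        grouped[(a.subject, a.predicate)].append(a)
    return dict(grouped)


def is_contested(claims: List[Assertion]) -> bool:
    """True when the recorded claims disagree about the value."""
    return len({c.value for c in claims}) > 1


# Invented data: two scholars date the same letter differently.
assertions = [
    Assertion("ex:letter/42", "ex:dateWritten", "1643", "Scholar A",
              "2009-05-01", "postmark", "probable"),
    Assertion("ex:letter/42", "ex:dateWritten", "1645", "Scholar B",
              "2011-02-14", "reference to a later event", "conjectural"),
    Assertion("ex:letter/42", "ex:recipient", None, "Scholar A",
              "2009-05-01", "address leaf missing", "certain"),
]

knowledge = state_of_knowledge(assertions)
for key, claims in knowledge.items():
    print(key, "contested" if is_contested(claims) else "uncontested")
```

A visualisation or analysis tool working from such records could then choose how to display contested or conjectural values, rather than silently inheriting a single figure whose basis is unknown.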

I believe there needs to be a subtle change in mindset when creating reference resources for scholarly purposes (and, arguably, more generally). Rather than always aiming for objective statements of truth, we need to realise that a large amount of knowledge is derived via inference from a limited and imperfect evidence base, especially in the humanities. Thus we should aim to accurately represent the state of knowledge about a topic, including omissions, uncertainty and differences of opinion.
