In March 2009, a new extension was enabled on the English Wikipedia: the abuse filter (see previous Signpost coverage). However, at least on the English Wikipedia it was soon renamed as the Edit filter to avoid implicitly labelling edits that are merely incorrect as "abuse". The form of the filter has remained largely unchanged. The broad power it offered to deal conclusively with whole patterns of vandalism at once was very appealing to administrators on a number of projects: since March 2009, another 65 wikis have asked for the extension to be enabled for their community. Now the service is to be enabled by default for Wikimedia wikis, the Foundation has announced, extending it to all 843 wikis under the Foundation's guidance (WMF blog).

The move is primarily designed to free up the time of developers, who have otherwise had to process the dozens of access requests separately, and the time of local admins, who no longer have to wait for it to be deployed (foundation-l mailing list). Reaction to the announcement has been mixed: several users (MZMcBride, Nemo, wjhonson) expressed concern that, in its present state, the extension may allow rouge admins on smaller wikis to quietly silence alternative points of view to their own in content disputes. The issue of terminology was also raised; MZMcBride favoured a global rename of the extension's language along the lines of that already used on the English Wikipedia to avoid stigmatising users. There has also been support for the Foundation's decision, however. Commenting on the news, User:NawlinWiki said that the edit filter was a success story, helping to "stop certain types of pattern vandalism that were previously very difficult to deal with". With regard to the learning curve faced by operators on other wikis, he pointed to the ability to copy filters between wikis, allowing other communities to benefit from the experiences of major wikis such as the English Wikipedia.

Since the extension comes with no filters by default, there will be no immediate noticeable difference. In addition to the filtering of edits, the extension is also responsible for tagging edits.

The Wikimedia Foundation's report for July has been published on meta-wiki. It reports that visitor numbers are down compared to earlier in the year, but still exceed figures from the same period last year, whilst both income and expenditure were higher than expected for various reasons, including the rescheduling of grant payments. The report also confirms that the Foundation has been struggling to hire new employees at the rate it had intended to; the Foundation's human resources department put this down to its focus on quality, and said that it would "need to stay focused and engaged to make [its] target of 40 more hires".

The report also draws together the activity of the Foundation's many other sub-departments, much of which was reported during July by The Signpost (which is itself linked in the report), such as the month's Engineering Report and Wikimedia's defence of its trademark in a recent WIPO case, described in the report as a "strong [decision], useful in future challenges". Also mentioned is a new, Foundation-sponsored "student clubs" project, which the report describes as a success: there has "already [been] much uptake in clubs forming virally throughout the world". The Foundation is now working on drawing up a model agreement on trademark usage and template materials for potential student groups.

The Wikimedia Foundation has published a report (also discussed on the Foundation blog) summarising the results of the editor survey conducted in April. Interesting findings include demographic data showing that the stereotypical image of the Wikipedian as a twenty-something graduate student geek may be less accurate than previously thought. The Board of Trustees election was unfamiliar to 45% of editors, and 46% of editors did not know whether there was a Wikimedia chapter in their country. Some findings had already been published earlier in a series of blog posts (see e.g. Signpost coverage: "First results of editor survey: Wikipedians 90% male, 71% altruist", "Further data from editor survey", 2011 editor survey: Board of Trustees elections").

Only 8% of respondents were women and women contributors generally had a lower number of edits, but the number of women editors is growing and the number of women reporting issues with stalking and other negative behavior is only 5% of the total number of women editors. There is plenty of good news: editors that get positive feedback tend to edit more, and editors report getting more positive than negative feedback. Interestingly, over half of contributors edit Wikipedias in more than one language and 72% of contributors read Wikipedia in more than one language.

The report touches on the growing importance of mobile access to Wikipedia with high numbers of people reporting having a mobile phone (84%), but significantly, only 38% have a smartphone.

A number of other wikis also reached milestone user number totals. For example, the Swahili Wikipedia has reached 10,000 registered users, double the number reported by The New York Times in January of last year in a report on the Google-assisted efforts at developing the project. Also passing milestones were the Burmese Wikipedia, the Waray-Waray Wikipedia, and the Kazakh Wikipedia, who all also surpassed 10,000 registered users this month.

Although Wikipedia explicitly identifies itself as not a source of news, it is often updated rapidly to reflect current events (for example, its coverage of the 2010 Haiti earthquake received a favourable writeup from traditional news media). As a result, Wikipedia articles were appearing on the front page of news aggregation service Google News as early as June 2009, and often receive large page view spikes when news breaks. This was also the case on Tuesday, with an article on the 2011 Virginia earthquake springing up within minutes of the event, and with Hurricane Irene, whose article grew from nothing to over 100kB as the hurricane approached the city of New York in the latter half of the week.

Such rapid growth attracted its own media attention. The Washington Post dedicated a story to the English Wikipedia's article on the Virginia earthquake, noting that "Wikipedians needed just eight minutes to cooly consign the '2011 Virginia earthquake' to history". The Post's coverage was positive, appearing to praise the encyclopedic, historical tone of the coverage, the quick reversion of vandalism to the article, and the merging of another article on the same event. Online news site The Daily Dot looked instead at the Hurricane Irene article, including favourable quotations from editors to the page who explained the difficulties of editing such a popular article.

Wikinews also has articles on the events: Tropical Storm Irene passes over New York and Magnitude 5.8 earthquake in Virginia felt up and down U.S. east coast. In addition to specific coverage of the Wikipedia articles, a number of news organisations quoted Wikipedia articles for facts and figures on the events and similar ones from history.

Pulling no punches, David Swindle kicked off a series of articles for FrontPageMag, a conservative website based in California, that analyses the political slant of Wikipedia, proposing to show "How the left conquered Wikipedia". He does this firstly by comparing specific articles from opposite sides of the US political spectrum, and showing how in each pairing the "liberal" personality or organisation receives a more favourable write-up (he does not appear, however, to have attempted a systematic analysis of all pages from each side). In articles that appear well-referenced, he also notes the low percentage of sources used in these articles that he would characterise as "conservative", compared with the relatively high percentage of "liberal" sources. Swindle adds that articles on "leftists" may include controversy, but only where the subject has apologised for his or her error, thus "transforming a failing into a chance to show the subject's humanity".

After a brief interlude discussing the vulnerability of Wikipedia to high-profile BLP-attacks and the real life damage they may cause, Swindle returns to his central thesis of a liberal bias in Wikipedia, attributing it to the characteristics of the average Wikipedia editor (whom he describes as "alone and apparently without a meaningful, fulfilling career"). "Unfortunately, Wikipedia, because of its decision to create an elite group of 'information specialists', has picked its side in [political battles] and is now fighting on the front lines", writes Swindle. As for future essays, the commentator advises that he will demonstrate how "the bias in entries for persons no longer living and historical subjects is less marked and, when present, more subtle".

This is not the first time Wikipedia has been accused of having a liberal bias, the issue has been raised most notably by Andrew Schlafly who asserted that "Wikipedia is six times more liberal than the American public" and started his own website Conservapedia to be a conservative alternative. Last year, FrontPageMag already described Wikipedia as "an Islamist hornet’s nest" (Signpost coverage: "Wikipedia accused of 'Islamofascist dark side'"), and had one author explain her negative experiences while editing Wikipedia by the hypothesis that "Wiki has an Israel problem. Wiki has a Jewish problem" (Signpost coverage: "Wikipedia downplaying the New York Times' anti-semitism?").

A team of researchers from the MIT Center for Collective Intelligence investigated the structure of collaboration networks in Wikipedia and their performance in bringing articles to higher levels of quality. The study,[1] presented at HyperText 2011, defines collaboration networks by considering editors who edited the same article and exchanged at least one message on their respective talk pages. The authors studied whether a pre-existing collaboration network or structured collaboration management via WikiProjects accelerate the process of quality promotion of English Wikipedia articles. The metric used is the time it takes to bring articles from B-class to GA or from GA to FA on the Wikipedia 1.0 quality assessment scale. The results show that the WikiProject importance of an article increases its promotion rate to GA and FA by 27% and 20%, respectively. On the other hand, the number of WikiProjects an article is part of reduces the rate of promotion to FA by 32%, an effect that the authors speculated could imply that these articles are broader in scope than those claimed by fewer WikiProjects. Pre-existing collaboration also dramatically affects the rate of promotion to GA and FA (with 150% and 130% increases, respectively): prior collaborative ties significantly accelerate article quality promotion. The authors also identify contrasting effects of network structure (cohesiveness and degree centrality) on the increase of GA and FA promotion times.

The paper "Wikipedia and institutional repositories: an academic symbiosis?"[3] is concerned with Wikipedia articles citing primary sources when suitable secondary ones (as per WP:SCHOLARSHIP) are not available. Only about 10% of scholarly papers are published as open access, but another 10% are freely available through self-archiving, thus doubling in practice the number of scholarly primary resources that Wikipedia editors have at their disposal. The article describes a sample of institutional repositories from the major higher-education institutions in New Zealand, along with three Australian institutions serving as controls, and analyses the extent to which they are linked from Wikipedia (across languages).

Using Yahoo! Site Explorer, a total of 297 links were estimated to go from Wikipedia articles to these repositories (40% of which went to the three Australian controls), mostly to support specific claims but also (in 35% of the cases) for background information. In terms of document type linked from Wikipedia, PhD theses, academic journal articles and conference papers each scored about 20% of the entries, whereas in terms of Wikipedia language, 35% of links came from non-English Wikipedias.

The paper cites strong criticism of institutional repositories[4] but proposes "a potential symbiosis between Wikipedia and academic research in institutional repositories" – Wikipedia getting access to primary sources, and institutional repositories growing their user base – as a new reason that "academics should be systematically placing their research work in institutional repositories". Ironically, the author himself did not follow this advice. However, such potential alignments between Wikimedians and open access have been observed in related contexts – according to the expert participation survey. For instance, Wikipedia contributors are more likely to have most or all of their publications freely available on the web.

As is custom in academia, the paper does not provide links to the underlying data, but the Yahoo! Site Explorer queries can be reconstructed (archived example) or compared to Wikipedia search results and site-specific Google searches. There is also code from linkypedia and the Wikipedia part of the PLoS Altmetrics study that could both be adapted for automating such searches.

In an article titled "Information quality assessment of community generated content: A user study of Wikipedia" (abstract),[5] published this month by the Journal of Information Science, three researchers from Bar-Ilan University reported on a study examining judgment of Wikipedia's quality by non-expert readers (done as part of the 2008 doctoral thesis of one of the authors, Eti Yaari, which was already covered in Haaretz at the time).

The paper starts with a review of existing literature on information quality in general, and on measuring the quality of Wikipedia articles in particular. The authors then describe the setup of their qualitative study: 64 undergraduate and graduate students were each asked to examine five articles from the Hebrew Wikipedia, as well as their revision histories (an explanation was given), and judge their quality by choosing the articles they considered best and worst. The five articles were pre-selected to include one containing each of four quality/maintenance templates used on the Hebrew Wikipedia: featured, expand, cleanup and rewrite, plus one "regular" article. But only half of the participants were shown the articles with the templates. Participants were asked to "think aloud" and explain their choices; the audio recording of each session (on average 58 minutes long) was fully transcribed for further analysis, which found that the criteria mentioned by the students could be divided into "measurable" criteria "that can be objectively and reliably assigned by a computer program without human intervention (e.g. the number of words in an article or the existence of images)" and "non-measureable" ones ("e.g. structure, relevance of links, writing style", but also in some cases the nicknames of the Wikipedians in the version history). Interestingly, a high number of edits was both seen as a criterion indicating good quality by some, and indicating bad quality by others, and likewise for a low number of edits.

Comparing the quality judgments of the study's participants with that of Wikipedians as expressed in the templates revealed some striking differences: "The perceptions of our users regarding quality did not always coincide with the perceptions of Wikipedia editors, since in fewer than half of the cases the featured article was chosen as best". In fact, "in three cases, the featured article was chosen as the lowest quality article out of the five articles assessed by these participants." However, those participants who were able to see the templates chose the "featured" article considerably more often as the best one, "even though the participants did not acknowledge the influence of these templates".

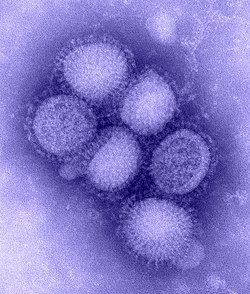

A paper published in Health Communication examined "Public Anxiety and Information Seeking Following the H1N1 Outbreak" (of swine influenza in 2009) by tracking, among other measures, page view numbers on Wikipedia, which it described as "a popular health reference website" (citing a 2009 paper co-authored by Wikipedian Tim Vickers: "Seeking health information online: Does Wikipedia matter?"[6]). Specifically, the researchers - psychologists from the University of Texas and the University of Auckland - selected 39 articles related to swine flu (for example, H1N1, hand sanitizer, and fatigue) and examined their daily page views from two weeks prior to two weeks after the first announcement of the H1N1 outbreak during the 2009 flu pandemic. (The exact source of the page view numbers is not stated, but a popular site providing such data exists.) Controlling for variations per day of the week, they found that "the increase in visits to Wikipedia pages happened within days of news of the outbreak and returned to baseline within a few weeks. The rise in number of visits in response to the epidemic was greater the first week than the second week .... At its peak, the seventh day, there were 11.94 times as many visits per article on average."

While these findings may not be particularly surprising to Wikipedians who are used to current events driving attention for articles, the authors offer intriguing comparisons to the two other measures of public health anxiety they study in the paper: The number of newspaper articles mentioning the disease or the virus, and the number of blog entries mentioning the disease. "Increased attention to H1N1 happens most rapidly in Wikipedia page views, then in the blogs, and finally in newspapers. The duration of peak attention to H1N1 is shortest for the blog writers, followed by Wikipedia viewers, and is longest in newspapers." Examining correlations, they found that "The number of blog entries was most strongly related to the number of newspaper articles and number of Wikipedia visits on the same day. The number of Wikipedia visits was most strongly related to the number of newspaper articles the following day. In other words, public reaction is visible in online information seeking before it is visible in the amount of newspaper coverage." Finally, the authors emphasize the advantages of their approach to measure public anxiety in such situations over traditional approaches. Specifically, they point out that in the H1N1 case the first random telephone survey was conducted only two weeks after the outbreak, and therefore underestimated the initial public anxiety levels, as the author argue based on their combined data including Wikipedia pageviews.

A paper by researchers of GroupLens Research to be presented at the upcoming WikiSym 2011 symposium offers the most comprehensive analysis of gender imbalance in Wikipedia so far.[7] This study was covered by a summary in the August 15 Signpost edition and, facilitated by a press release, it generated considerable media attention. Below are some of the main highlights from this study:

A second, unpublished paper addressing gender imbalance in Wikipedia ("Gender differences in Wikipedia editing") by Judd Antin and collaborators will be presented at WikiSym 2011.

In a paper titled "The Behavior of Wiki Users",[8] appearing in Social Behavior and Personality: An International Journal, two researchers from Taiwan used the Unified Theory of Acceptance and Use of Technology (UTAUT) "to explain why people use wikis", based on an online questionnaire distributed in July 2010 in various venues and to Wikipedians in particular. According to an online version of the article, the survey generated 243 valid responses from the Chinese-speaking world, which showed that – similar to previous results for other technologies – each of the following "had a positive impact on the intention to use wikis":

The impact of user involvement was the most significant. Social influence (e.g. "The people around me use wikis") was not found to play a significant role. On the other hand, the researchers state that a person's general susceptibility to the "bandwagon effect" (measured by statements such as "I often follow others' suggestions") "can intensify the impact of [an already present] intention to use wikis on the actual use ... This can be explained in that users tend to translate their intention to use into actual usage when their inclination receives positive cues, but the intention alone is not sufficient for them to turn intention into action. ... people tend to be more active in using new technology when social cues exist. This is especially true for societies where obedience is valued, such as Taiwan and China."

The following is an op-ed by Beeblebrox, an established editor, administrator, and oversighter on the English Wikipedia. He writes of his experiences with the trial of pending changes in 2010 and in assessing its results. Pending changes is a review system that prevents certain edits from being publicly visible until they are approved by another editor. The system temporarily used on the English Wikipedia was a modified form of the system in use on a number of other Wikimedia projects; nonetheless, because it altered the fundamental editing process in an attempt to stem abuses, it attracted both supporters and opponents in large numbers. For a full list of pages connected to the trial, see Template:Pending changes trial. The views expressed are those of the author only.

The Signpost welcomes proposals for op-eds. If you have one in mind, please leave a message at the opinion desk.

They say the road to hell is paved with good intentions. I had good intentions, and they led me straight into Wikipedia Hell. As most of you know, pending changes (PC) was a modified version of the "flagged revision" system used on other Wikipedia projects. It was deployed here as a trial: the trial period expired and ... nothing happened. That's where I come in.

I had applied PC to a few dozen articles during and after the trial period. I got a message on my talk page from a user who noted that I was still using it even though the trial period was over. I didn't think I was doing anything drastic, but it was still bothering some folks because there was no clear mandate to continue using the tool. I'd participated in a number of policy discussions in the past, so I took it upon myself to seek an answer to the question of whether we wanted to retain this tool or not. Six months later, the question remains unanswered.

It started with a simple request for comment ([2]). I really didn't know what to expect. I knew the original discussions had been heated, and that many people had believed this tool would create more class divisions on Wikipedia; but after the trial began, the furor seemed to have died down. My goal was to come up with a yes or no answer as to whether we should use the tool, but in retrospect it was naive of me to think it would be that simple. I opened the discussion on February 16. By the 19th it had grown into a long, disjointed conversation on a myriad of topics. There were many misunderstandings, and a lot of confusion regarding who was supposed to do what when the trial ended. That appears to be where this whole thing went wrong. Everyone was angry that nothing was being done, but nobody seemed to know definitively who was supposed to be doing what in the first place.

Things were getting a bit out of control as discussions were duplicated and new participants added new sections without apparently having read previous posts. On March 8 the second phase of the RFC began. The rate of participation was high, and disruption and factionalism were low. However, a small (it seemed to me) but very vocal group of users felt that we shouldn't have a conversation about whether to keep it until it was turned off. Gradually, this became the primary topic of discussion on the talk page. Contributors began to split into two camps: editors who wanted the tool turned off and those of us who felt this was irrelevant. I was dismayed by what I saw as the emergence of an adversarial relationship. The waters were becoming muddied and an unpleasantly confrontational atmosphere was developing on the talk page: a storm was brewing.

To try once again to organize discussion into a format that would yield usable results, I proposed yet another phase. The idea would be a survey for editors to complete. I'd participated in the Wikipedia:RfA Review/Recommend survey and liked the format. I believed this issue was not as contentious as RfA, and that we could use the combined results of the three phases to determine what the community wanted and move forward. I still believe that.

I tried to roll out the third phase. I asked for feedback on it, but got very little. Eventually it was clear the increasingly vocal users who wanted to switch off PC didn't like the existence of the third phase. For my part, while I didn't "own" the RFC I did feel it should focus on the particular purpose for which I'd created it: to determine whether or not we should continue to use PC. How could we craft a policy on the use of a tool if we couldn't even decide if we would use it, and how could we expect the Foundation to expend its resources to develop it if we were unable to tell them if it would end up being used? I decided to push ahead as only a few users out of the 100+ who had participated in earlier phases had objected to the final phase. The breakdown of what happened in phase two suggested that we had some fairly usable results, and I didn't want to lose the momentum we had. I wanted to get this over the finish line and answer what I was now calling "The Big Question".

I turned on the questionnaire phase after ten days of discussion that had resulted in changes to both the wording and ordering of the questions. Nobody had proposed an alternative procedure other than reverting back to open discussion, which had already proved to be too messy to yield any usable results in my view. Was I being pushy? Maybe, but I felt it was important to resolve this issue, which had by then been discussed for more than a month.

Two questionnaires had been submitted when a user decided to revert phase three and place it on hold pending further discussion. For the first time, I was actually feeling stress and getting angry about something on Wikipedia. I am usually able to keep my cool fairly well, but accusations were being leveled at me and I felt that irrelevant objections were sidelining a major policy discussion that would have far-reaching consequences. I repeatedly stated that if turning PC off was what it would take to get the conversation back on track that we should just do it. That wasn't good enough for some users, and a new third phase was created whose sole purpose was to discuss the temporary use of the tool. I admit that I began to make some intemperate remarks and some foul language crept into my conversation. I was frustrated with Wikipedia for the first time in years. My third phase was put on hold while the other issue was being resolved. By now the RFC had been open for 45 days.

There's a lot more I could say about what happened next, but it is all there in the archives for those who want the details. Eventually I decided I'd had enough: too much time was being spent debating my alleged motivations as opposed to the actual issues, and I quit the process—a process I'd initiated with the simple intention of answering one question. I un-watchlisted the related pages and haven't looked at them again until now. A couple of users expressed concern that I might quit Wikipedia altogether, and I re-assured them that I was just sick of the tactics used in the debate, and didn't want to be part of it anymore. The RFC was finally closed on May 27, 101 days after I opened it. In the end, all that happened was that PC was "temporarily" taken out of use, the same way it was temporarily turned on. It's still there, we just aren't allowed to use it until we finally answer that "big question" I set out to answer back in February. Nothing more substantive than that was decided. There's still no policy on PC. For all that effort, we failed to achieve the primary goal of deciding whether or not to use the tool, although, after a poll, it was eventually removed from all pages on which it was still being used.

When I got the discussion about PC going, I saw it as my opus, my great contribution to Wikipedia's policy structure. Whether PC was kept or not, we would finally have a policy on it one way or the other after many years of debate. Although I admit I had a preferred outcome, what I wanted most, what Wikipedia needed most, was a yes or no answer. I dedicated many hours to organizing the debate and engaging in discussion. In the end it was a bitter disappointment that accomplished nothing. There seems inevitably to come a point in any such attempt where there are simply too many voices, too many nonsensical objections, too much petty bickering to get anything done. This is a growing, systemic problem at Wikipedia, and eventually we are going to have to deal with it.

When people talk to me about Wikipedia I always tell them that the best thing about it and the worst thing about are the same thing. The consensus-based decision-making model works in a lot of cases, but sometimes it fails us because there are no controls. Nobody was able to keep this process moving in a forward direction once those who wanted to discuss a different issue had derailed it. Perhaps, when the tool has been switched off for long enough, we can look at this again and try to answer that one question without the psychological barrier of its simultaneous non-consensual operation. When that day comes, I'll be happy to be on the other side of the fence as a participant, not the guy trying to be the ringmaster of an out-of-control circus.

NOTE:click here to see this piece as it was originally submitted

Reader comments

To celebrate the start of the US Open this week, we decided to hit some questions back and forth with WikiProject Tennis. From the project's inception in September 2006, WikiProject Tennis has grown to include over 17,000 pages including five pieces of Featured content and eight Good and A-class articles. The project is home to several sub-projects ranging from the Grand Slam Project to the Tournament Task Force. The project maintains the Tennis Portal and seeks to fulfill a list of goals. We exchanged volleys with project members Sellyme and Totalinarian.

What motivated you to join WikiProject Tennis? Are you a fan of a particular tennis player?

How does the project handle the notability of tennis players? Have you had to deal with any editors creating articles for non-notable players?

Like many sports-related projects, WikiProject Tennis often deals with biographies of living people. Does this place any extra burden on the project? How frequently do BLP issues arise for tennis players?

The project has a very active talk page. What brings tennis enthusiasts together? What tips would you give to projects that struggle to foster discussion?

The Tournament Task Force was created this summer. What are the task force's goals? What resources are needed to reach those goals?

What are the project's most pressing needs? How can a new contributor help today?

Next week we'll find a faster way to commute to Westminster. Until then, take the tube over to the archive.

Reader comments

Three featured articles were delisted:

Three lists were promoted:

Eleven images were promoted. Medium-sized images can be viewed by clicking on "nom":

No cases were opened or closed this week. Four cases remain open:

This week, bugmeister Mark Hershberger coined the term "bugosphere" to describe "the microcosm that evolves around a particular instance of Bugzilla" such as the MediaWiki Bugzilla. In this edition of What is?, we look at the processes and procedures underlying the Wikimedia bug reporting system (in Bugzilla terms, a 'bug' may be a problem with the existing software or a request for features to be added in future versions, which may also be referred to as an 'enhancement' when differentiation is desired).

Bug #1 was filed on 10 August 2004; as of time of writing, 30602 bugs have been submitted. Of those, approximately twenty-four thousand have been closed, whilst six thousand are still open (about 60 percent of which are requests for enhancements). Not all bugs related to Wikimedia wikis; the MediaWiki Bugzilla collates reports from all users of the software, in addition to bug reports that do not relate to MediaWiki but instead relate to Wikimedia websites. In any given week, approximately 90 bugs will be opened, and approximately 80 closed (in extraordinary weeks, such as bug sprints, as many as 65 extra bugs may be closed). As such, Bugzilla serves as central reference for monitoring what has been done, and what still needs doing.

Registration on Bugzilla is free but necessary (logins are not shared between Bugzilla and Wikimedia wikis for many reasons, including the increased visibility of email addresses on Bugzilla). Anyone may comment on bugs; comments are used principally to add details to bug reports, or suggestions on how they should be fixed. Voting in support of a bug is possible, but in general bugs are worked on by priority, or by area of expertise; few "critical"-rated bugs remain long enough to accumulate many votes. In January this year, the Foundation appointed Mark Hershberger as bugmeister, responsible for monitoring, prioritising and processing bug reports. More recently, he has been organising a series of "triages", when bugs are looked at and recategorised depending on their progress and severity. To file a bug or feature request, visit http://bugzilla.wikimedia.org, though it is usual to demonstrate a consensus before filing a request for a controversial feature or configuration change.

Not all fixes may have gone live to WMF sites at the time of writing; some may not be scheduled to go live for many weeks.

{{{publisher}}} and {{{work}}} parameters of citation templates, and to remove flags from certain infoboxes.